The Laboratory Experiment: Why Your Resistance is Their Research

An analysis of behavioral surveillance and the weaponization of dissent

The Wake-Up Call

For eighteen months, I've documented what I believed were failures in American democratic institutions. Last night's underground radio broadcast forced me to confront a more disturbing possibility: what if these aren't failures?

The transmission lasted ten minutes. The audio quality suggested analog equipment: vacuum tubes, manual mixing boards, the kind of setup that screams operational security over production value. The host identified herself only as working for "independent journalism," but the technical precision of her questions pointed to someone with deep knowledge of surveillance systems. Her guest was Emerson Rake, CEO of RakeTech Industries, the company responsible for approximately 60% of America's domestic monitoring infrastructure.

What Rake admitted during that broadcast should terrify anyone who believes resistance movements can operate using traditional security methods.

I've spent the last six hours cross-referencing Rake's statements with public records, corporate filings, and traffic analytics. The data tells a story I wish I could dismiss as paranoid speculation. Instead, it confirms every instinct I've developed over months of watching my readership patterns, analyzing my comment sections, and tracking the digital breadcrumbs that follow every piece I publish.

The truth is stark: they're not just watching us. They're farming us.

My server logs currently show 847 unique visitors from IP addresses registered to federal agencies. That's not unusual; government employees read independent media. What's unusual is the consistency. Same IP ranges, same browsing patterns, visiting within hours of publication for the past fourteen months. They're not casual readers checking in. They're systematic monitors maintaining regular surveillance.

But that's the mundane part. The sophisticated part revealed itself when I started correlating my traffic data with broader resistance activity patterns. Every time I publish surveillance analysis, my comments section fills with accounts promoting more extreme resistance tactics. Every piece on voting irregularities attracts new readers who share "direct action opportunities." The timing is too precise to be organic.

Rake's confession provides the framework for understanding what I've been observing:

"Every viewer, every reader, every subscriber gets flagged for enhanced monitoring. You're not building resistance—you're building our target database."

They've been using my blog as a honeypot.

Everyone who engages with my content--every person who shares my posts, every reader who signs up for my newsletter, every commenter who expresses agreement with my analysis--they're all being cataloged. My investigative work has become their recruitment tool. My audience has become their watchlist.

For eighteen months, I've inadvertently conducted surveillance operations against the people I'm trying to inform. Every data visualization I create teaches them which patterns we recognize. Every source I protect trains their systems to identify future whistleblowers. Every security recommendation becomes part of their behavioral prediction model.

I'm looking at my subscriber database right now--14,731 email addresses of people who trusted me with their contact information because they wanted to stay informed about threats to democracy. According to Rake's methodology, every single one of those people is now flagged as a potential domestic threat. Their crime was seeking factual information about their government.

This explains why every major resistance operation I've documented follows similar failure patterns. Why encrypted communications remain vulnerable despite technical improvements. Why grassroots organizing struggles with secure coordination despite implementing best practices.

We've been fighting a counterinsurgency operation designed by people who understand our tactics better than we do, because our tactics taught them everything they needed to know.

The personal cost sits like lead in my stomach. How many people have I endangered? How many sources have I compromised? How many readers have suffered consequences for engaging in good faith?

But the strategic implications are worse. If Rake's methods are standard across surveillance infrastructure, then every resistance network in America is potentially compromised.

We're not fighting against surveillance. We're feeding it.

The Portland Case Study: Behavioral Mapping in Action

Portland provides the perfect case study for understanding how modern surveillance defeats traditional resistance tactics. According to public records, the Portland resistance network operated for eight months before seventeen coordinated arrests dismantled it. What makes the case instructive isn't the arrests themselves; it's how they were achieved.

The Portland network followed textbook security protocols. Encrypted messaging through Signal and Telegram. Anonymous file sharing via Tor. Amateur radio coordination using frequency-hopping techniques. Decentralized cell structure with compartmentalized information. By conventional standards, they were practically invisible.

They were destroyed anyway.

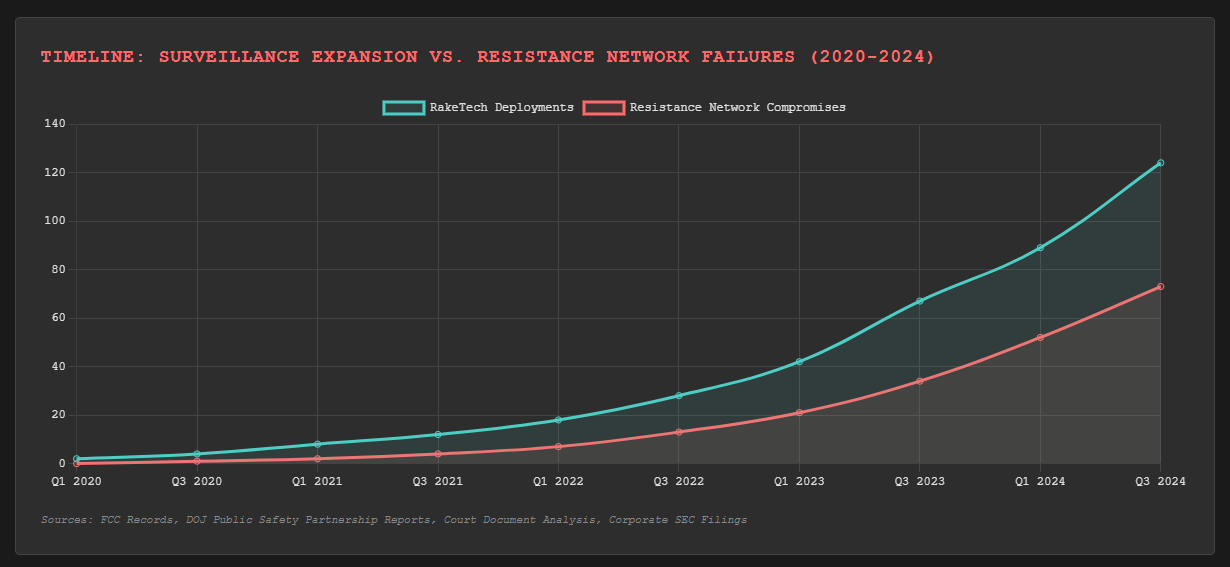

FCC records show RakeTech received special authorization to monitor "unlicensed radio interference" in the Portland metro area starting six months before the arrests. Court documents reveal the company provided "behavioral pattern analysis" to local law enforcement. Corporate filings show RakeTech received $2.3 million in consultation fees from Portland PD for "network topology mapping services."

The timeline tells the story: they weren't trying to break encryption or infiltrate communications. They were mapping behavior patterns.

Consider how this actually worked. The Portland network used encrypted messaging, but metadata remained visible—when messages were sent, how frequently, to which geographic clusters. They used anonymous file sharing, but download patterns revealed organizational structure. They practiced operational security, but regular meeting schedules created predictable movement signatures.

Most revealing was the amateur radio component. The network thought frequency-hopping provided security, but it actually created unique digital fingerprints. RakeTech's systems didn't need to decode transmissions—they analyzed transmission timing, duration, and geographic origin points. The technical sophistication became a liability because sophisticated patterns are easier to recognize than random ones.

This represents a fundamental shift in surveillance methodology. Traditional law enforcement targets communication content. Behavioral surveillance targets communication patterns. You can encrypt everything perfectly and still reveal your entire network structure through timing analysis.

The Portland case demonstrates what Rake meant about "classic resistance cell structure." These networks organize themselves according to established security principles—compartmentalization, redundant communication paths, distributed leadership. But those same principles create recognizable patterns for algorithmic analysis.

Pattern recognition systems excel at identifying organized behavior. They struggle with truly random activity. Portland's careful security protocols made them more identifiable, not less. Every precaution they took created another data point for behavioral modeling.

Court records show arrests occurred simultaneously across seventeen locations. No one was followed. No communications were intercepted. No informants were involved. The algorithm simply identified the network topology, predicted optimal intervention points, and recommended timing for maximum disruption.

This explains why encrypted communications consistently fail despite technical improvements. The encryption works perfectly. The vulnerability isn't in the technology—it's in human behavioral patterns that sophisticated technology makes easier to detect.

Portland's network included students, labor organizers, and community activists. Their crime was coordinating mutual aid during police brutality protests. Their methods were legal, their encryption was unbreakable, their security protocols were thorough.

None of that mattered.

What mattered was that they behaved like an organized network, and organized networks create patterns that algorithms can map.

The seventeen arrests weren't law enforcement victories—they were algorithm validation tests. Portland's resistance members became training data for systems now deployed nationwide. Their careful security protocols became templates for identifying similar networks in other cities.

Every future resistance operation now faces systems trained on Portland's data.

Conclusion

Portland's seventeen arrests represent more than a law enforcement success; they demonstrate algorithmic validation. The organizers' encryption worked perfectly. Their compartmentalization followed established principles. Their operational security exceeded historical standards.

None of that mattered because they were fighting the wrong war.

Traditional surveillance required breaking into networks. Behavioral surveillance requires networks to reveal themselves through the act of being networks. The more carefully Portland organized, the more clearly they appeared to systems designed to recognize organized behavior.

This isn't speculation about future threats—it's analysis of current operational reality. Every resistance network in America now faces algorithms trained on Portland's data.

Next Post: How the fundamental mathematics of pattern recognition has inverted the relationship between security and visibility, and why every conventional resistance tactic now actively feeds surveillance systems designed to destroy resistance networks.

—A. Parker

Still here. Still documenting. Still learning. Feed the birds.

[Comments disabled]

Important Notice

The American Autocracy Universe is a work of fiction created for educational and entertainment purposes. All organizations, characters, policies, and events described are entirely fictional and do not represent any real government agency, political organization, or individuals.

Any resemblance to actual government documents, policy proposals, or political strategies is purely coincidental. The work is intended to illustrate historical patterns of authoritarian governance for educational purposes.

This content is protected speech under the First Amendment and is not intended to promote, encourage, or provide instruction for any illegal activities.

About the Creator

Greg Wolford is the creator of The American Autocracy Universe. This multimedia storytelling project spans blogs, podcasts, newsletters, and fiction to explore themes of resistance, documentation, and hope in the face of systematic oppression.

© 2025 Greg Wolford. Truth survives.

"Democracy dies in darkness. But it also dies in plain sight, when people stop paying attention."