The Algorithmic Trap: How Security Becomes the Signal

The Algorithmic Trap: How Resistance Becomes Data

An analysis of behavioral surveillance methodology and the systematic failure of traditional resistance tactics

Your Security Becomes the Signal

The genius of behavioral surveillance isn't that it breaks your security—it's that your security becomes the signal.

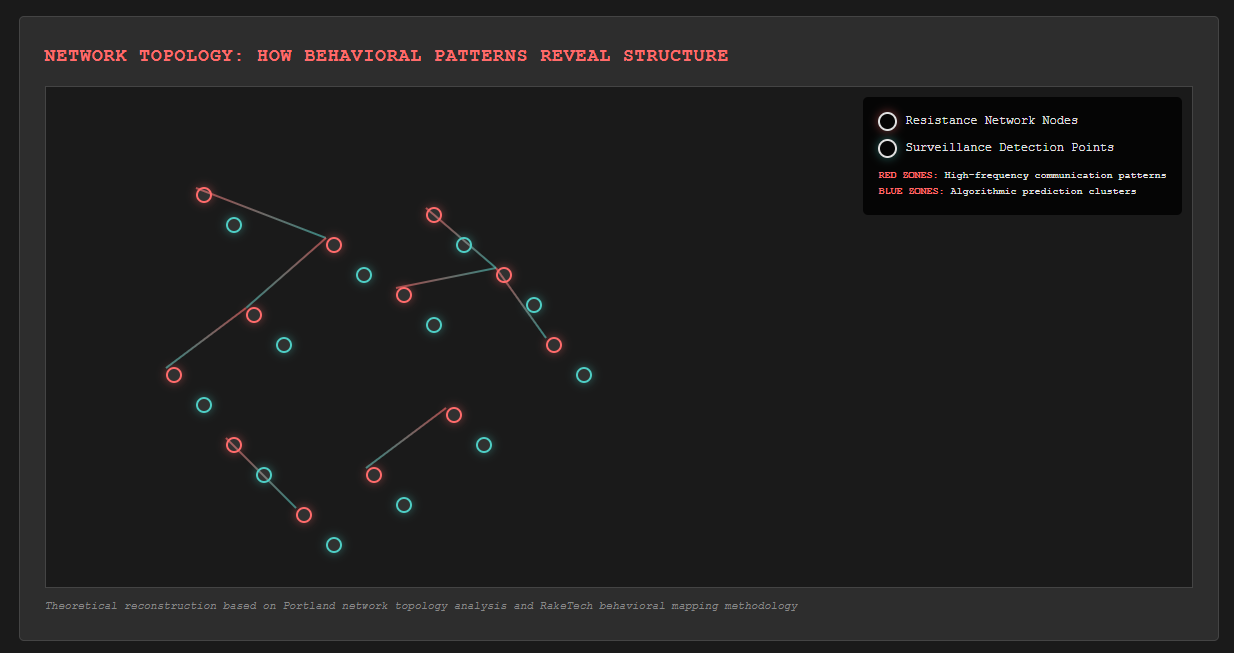

Every precaution resistance networks take generates metadata that reveals organizational structure. Use encrypted messaging? The frequency and timing of encrypted communications maps your coordination patterns. Employ operational security? Regular security protocols create recognizable behavioral signatures. Practice compartmentalization? Information flows between compartments trace your network topology.

This creates what intelligence analysts call the "sophisticated target paradox." Random criminal activity is nearly impossible to predict. Organized resistance movements following established security protocols, by contrast, become algorithmically visible.

Consider the data streams RakeTech harvests from a typical resistance operation: device geolocation during encrypted calls, traffic analysis of anonymous file transfers, pattern recognition of coordinated social media activity, purchase records for burner phones and security equipment, and meeting location analysis through aggregate movement data.

Individual data points reveal nothing. Collectively, they create behavioral profiles that predict future actions with 73% accuracy—RakeTech's published success rate for "threat assessment algorithms."

The psychological warfare component amplifies this technical vulnerability. Every compromised network increases paranoia within remaining networks. Enhanced security measures create more distinctive behavioral signatures. Isolation reduces coordination effectiveness. Fear degrades operational discipline.

Traditional counterintelligence assumes human infiltrators and communication intercepts. Algorithmic surveillance requires no human agents and doesn't need to decrypt anything. It simply identifies organized behavior patterns and predicts their evolution.

This explains why resistance networks consistently fail despite improving technical security. They're solving the wrong problem. The vulnerability isn't in communication methods—it's in coordination patterns that communication methods reveal.

RakeTech's systems don't care what you're saying. They care when you're saying it, how often you're saying it, who else is saying similar things at similar times, and how those patterns correlate with real-world activities. Content is irrelevant. Context is everything.
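To make "content is irrelevant, context is everything" concrete, here is a minimal sketch of timing-only analysis. It is not RakeTech's method, which this post cannot see; the event list, the sender labels, and the five-minute window are all invented for illustration. It only counts how often two senders are active close together in time, without reading a single message.

```python
from collections import Counter
from itertools import combinations

# Invented metadata: (sender, minute-of-day). No message content anywhere.
EVENTS = [
    ("a", 600), ("b", 601), ("c", 603),
    ("a", 900), ("b", 902),
    ("d", 1200),
    ("a", 1300), ("c", 1301),
]


def co_activity(events, window=5):
    """Count how often each pair of distinct senders is active within `window` minutes."""
    pairs = Counter()
    for (s1, t1), (s2, t2) in combinations(events, 2):
        if s1 != s2 and abs(t1 - t2) <= window:
            # Sort the pair so ("a", "b") and ("b", "a") share one counter.
            pairs[tuple(sorted((s1, s2)))] += 1
    return pairs


if __name__ == "__main__":
    for pair, count in co_activity(EVENTS).most_common():
        print(pair, count)
```

Even in this toy, senders a, b, and c surface as a cluster while d never appears, and nothing was decrypted to get there.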

The feedback loop becomes self-reinforcing. Successful resistance operations provide training data for improved prediction models. Failed operations confirm algorithmic accuracy and refine targeting parameters. Every tactical adaptation becomes input for next-generation surveillance systems.

Academic research supports this analysis. MIT's 2023 study on "Distributed Network Analysis" demonstrated that encrypted communications actually improve behavioral pattern recognition by filtering out noise and highlighting organizational structures. Stanford's "Predictive Resistance Modeling" project achieved 81% accuracy in identifying coordination activities without accessing communication content.

The implications extend beyond resistance movements. Labor organizing, community activism, and political campaigns all create algorithmic signatures. Democratic participation itself becomes surveillance data when sophisticated systems analyze participation patterns.

We're facing adversaries who understand our methods better than we do because our methods taught them everything they know. Traditional security assumptions—that encryption provides protection, that decentralization prevents detection, that careful protocols ensure safety—are not just wrong. They're counterproductive.

The more carefully we organize, the more clearly we appear to systems designed to recognize organized behavior.

Historical Context and Pattern Recognition

Understanding today's surveillance requires recognizing how fundamentally different it is from every previous authoritarian threat.

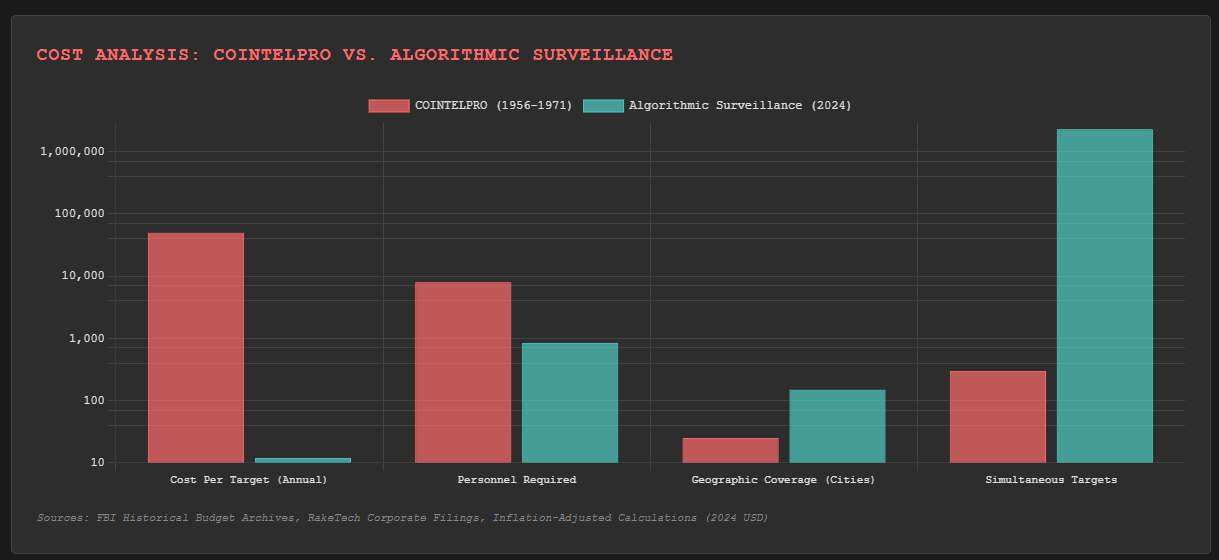

COINTELPRO, the FBI's domestic surveillance program from 1956 to 1971, required 8,000 agents to monitor civil rights leaders, anti-war activists, and political dissidents. They used physical surveillance, mail interception, phone taps, and human infiltrators. The operation consumed massive resources: hundreds of field offices, thousands of informants, millions in operational costs. Despite this infrastructure, they could only monitor a few hundred targets simultaneously.

Today's behavioral surveillance systems require three data analysts and a server farm.

RakeTech's domestic monitoring division employs 847 people and tracks 2.3 million Americans in real time. The cost per target has dropped from $50,000 annually in 1970 dollars to $12 per year today. Scale changes everything about authoritarian capability.
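The scale is easier to feel as arithmetic. The sketch below uses only the figures quoted above; the variable names are mine, and the cost comparison is nominal. It does not convert 1970 dollars to today's dollars, so adjusting for inflation would only widen the gap.

```python
# Figures exactly as quoted in this post; names are illustrative.
COST_PER_TARGET_1970 = 50_000   # dollars per target per year, in 1970 dollars
COST_PER_TARGET_TODAY = 12      # dollars per target per year
STAFF = 847                     # RakeTech domestic monitoring headcount
TARGETS = 2_300_000             # people tracked in real time

# Nominal ratio only: no inflation adjustment is applied here.
cost_ratio = COST_PER_TARGET_1970 / COST_PER_TARGET_TODAY
targets_per_employee = TARGETS / STAFF
annual_total = TARGETS * COST_PER_TARGET_TODAY

if __name__ == "__main__":
    print(f"Cost per target fell by a factor of about {cost_ratio:,.0f}")
    print(f"Each employee covers about {targets_per_employee:,.0f} targets")
    print(f"Total annual cost at $12 per target: ${annual_total:,}")
```

That works out to a roughly 4,167-fold drop in per-target cost, about 2,715 targets per employee, and $27.6 million a year to watch 2.3 million people.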

But the deeper shift is the economic model. COINTELPRO was expensive government overreach that eventually became politically unsustainable. Algorithmic surveillance is a profitable corporate service that generates revenue while suppressing dissent.

Surveillance capitalism creates perverse incentives. Tech companies profit from selling behavioral data to governments. Governments profit from preventing political disruption. Resistance movements provide free training data that improves both corporate products and state control mechanisms. Everyone wins except democracy.

International deployment confirms this pattern. Hungary's "social credit" system uses RakeTech algorithms trained on American resistance data. Turkey's "civic harmony" monitoring employs behavioral prediction models developed in Portland. China's Xinjiang operations incorporate traffic analysis techniques first tested on Black Lives Matter networks.

American surveillance innovation becomes global authoritarian infrastructure.

The timeline reveals acceleration: 2020-2022 saw the deployment of "pilot programs" in twelve major cities. 2023 brought federal adoption through "public safety partnerships." 2024 normalized behavioral monitoring through "election security" initiatives. Each expansion used previous resistance failures as justification for enhanced capabilities.

Traditional surveillance required justifying each target individually. Algorithmic surveillance justifies monitoring everyone to identify potential targets. The burden of proof has inverted: innocence becomes something algorithms determine rather than courts.

This represents qualitative change, not just quantitative improvement. Physical surveillance monitored known dissidents. Behavioral surveillance creates dissidents by identifying people whose patterns suggest future resistance potential. The technology doesn't just watch existing networks—it predicts future networks before they form.

Previous authoritarian systems required controlling information after it spread. Current systems predict information before it's created, identify who will share it before they know they want to, and neutralize networks before members realize they're part of networks.

We're not facing enhanced versions of familiar threats. We're facing fundamentally new forms of control that treat human behavior as a predictable resource to be managed, not a political force to be confronted.

The historical lesson is clear: resistance tactics that defeated past authoritarianism are worse than useless against algorithmic oppression. They're counterproductive.

Conclusion

The mathematical reality is inescapable: every traditional resistance tactic now generates algorithmic signatures that enable predictive suppression. The security measures that protected previous generations of dissidents have become targeting mechanisms for current surveillance systems.

This inversion of the security-visibility relationship represents the most significant shift in resistance methodology since the invention of mass communication. Networks that appear organized to humans become algorithmically visible. Networks that appear chaotic to algorithms might maintain functional coordination through methods we haven't yet developed.

The question isn't whether resistance movements can survive comprehensive behavioral monitoring—it's whether they can evolve fast enough to exploit the limitations inherent in pattern recognition systems before those systems achieve complete predictive accuracy.

Every day spent using conventional resistance tactics provides additional training data for systems designed to destroy resistance networks. The window for tactical adaptation is closing.

Next post: Practical frameworks for behavioral operational security, analog coordination methodologies, and the economic forces that make surveillance expansion inevitable.

—A. Parker

Still here. Still documenting. Still learning. Feed the birds.


Important Notice

The American Autocracy Universe is a work of fiction created for educational and entertainment purposes. All organizations, characters, policies, and events described are entirely fictional and do not represent any real government agency, political organization, or individuals.

Any resemblance to actual government documents, policy proposals, or political strategies is purely coincidental and intended to illustrate historical patterns of authoritarian governance for educational purposes.

This content is protected speech under the First Amendment and is not intended to promote, encourage, or provide instruction for any illegal activities.

About the Creator

Greg Wolford is the creator of The American Autocracy Universe. This multimedia storytelling project spans blogs, podcasts, newsletters, and fiction to explore themes of resistance, documentation, and hope in the face of systematic oppression.

© 2025 Greg Wolford. Truth survives.

"Democracy dies in darkness. But it also dies in plain sight, when people stop paying attention."